Throwback to my first neural network

I built my first neural network back in 2020, during my transition from academia into industry. I was supervising a bachelor's thesis on the mathematics of neural networks and wanted to work through the nitty-gritty details by hand. A very minimal example, and in my mind a fitting one for CS, was to implement the logical XOR gate as a neural network:

| A | B | A XOR B |
|---|---|---|
| 0 | 0 | 0 |
| 0 | 1 | 1 |
| 1 | 0 | 1 |
| 1 | 1 | 0 |

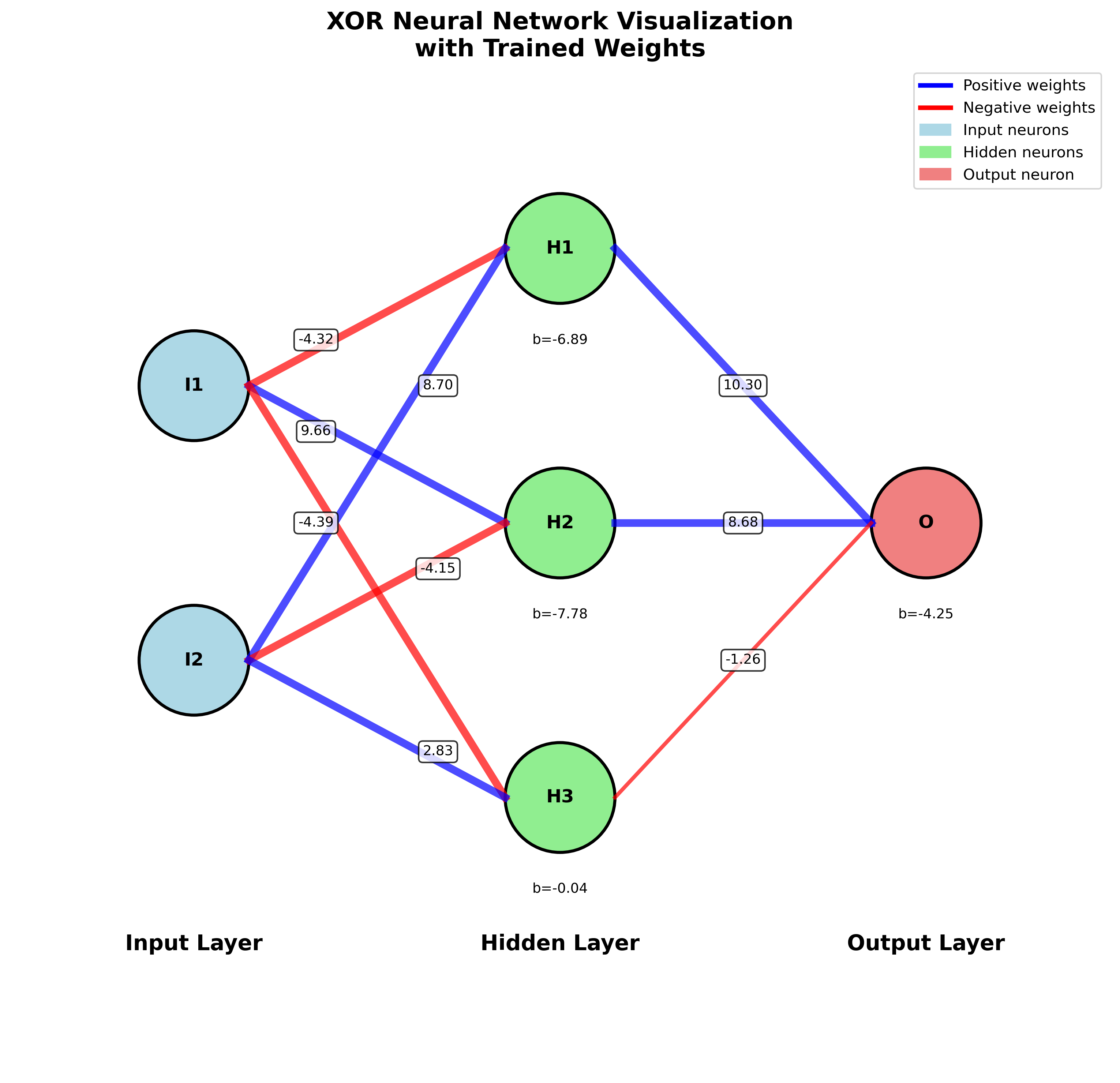

The XOR gate is not only a basic building block of computers, but also non-linear¹, i.e. at least a bit non-trivial. On the other hand, a neural network with two inputs, a hidden layer of three neurons, and one output is an architecture you can fit in your pocket. In a pinch, you could even calculate by hand a set of weights that solves the task. This made troubleshooting much easier.
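To make the "weights by hand" idea concrete, here is a minimal Python sketch of a 2-3-1 sigmoid network that computes XOR without any training. The specific numbers are my own illustrative choice, not the weights from the original project: one hidden neuron behaves roughly like OR, one like AND, and the third is left unused.

```python
import math


def sigmoid(z):
    """Standard logistic function: large positive z -> ~1, large negative z -> ~0."""
    return 1.0 / (1.0 + math.exp(-z))


# Hand-picked (not trained) weights, chosen for illustration only.
# Each hidden row is (weight for A, weight for B, bias).
HIDDEN = [
    (20.0, 20.0, -10.0),  # on if A or B is on (approximately OR)
    (20.0, 20.0, -30.0),  # on only if both are on (approximately AND)
    (0.0, 0.0, 0.0),      # unused in this sketch: always outputs 0.5
]
# Output neuron: one weight per hidden neuron, plus a bias.
OUTPUT_WEIGHTS = (20.0, -20.0, 0.0)
OUTPUT_BIAS = -10.0


def xor_net(a, b):
    """Forward pass: roughly 'OR and not AND', which is XOR."""
    hidden = [sigmoid(wa * a + wb * b + bias) for wa, wb, bias in HIDDEN]
    z = sum(w * h for w, h in zip(OUTPUT_WEIGHTS, hidden)) + OUTPUT_BIAS
    return sigmoid(z)


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, round(xor_net(a, b), 4))
```

Two hidden neurons would already be enough for this particular solution. The third one is kept only to match the three-neuron hidden layer described above.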

Back then I was still doing a considerable portion of my programming in MATLAB/Octave. So I designed all of it on paper, deriving the necessary differentials and whatnot by hand, and then simply implemented everything in MATLAB/Octave. The results are on my GitHub.
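For readers who want to see what "deriving the differentials by hand" amounts to, here is a rough Python sketch of backpropagation for the same 2-3-1 sigmoid network. It is not a port of the original MATLAB/Octave code. The squared-error loss, full-batch gradient descent, learning rate, random initialisation, and the parameter layout (`W1`, `b1`, `W2`, `b2`) are all assumptions made for this example.

```python
import math
import random

XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
N_HIDDEN = 3


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_params(rng):
    """Small random starting weights (an assumption of this sketch)."""
    return {
        "W1": [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(N_HIDDEN)],
        "b1": [rng.uniform(-1, 1) for _ in range(N_HIDDEN)],
        "W2": [rng.uniform(-1, 1) for _ in range(N_HIDDEN)],
        "b2": rng.uniform(-1, 1),
    }


def forward(p, x):
    """Return hidden activations and the network output for one input pair."""
    h = [sigmoid(p["W1"][j][0] * x[0] + p["W1"][j][1] * x[1] + p["b1"][j])
         for j in range(N_HIDDEN)]
    y = sigmoid(sum(p["W2"][j] * h[j] for j in range(N_HIDDEN)) + p["b2"])
    return h, y


def loss(p):
    """Sum of squared errors over the four XOR cases, with a factor 1/2."""
    return sum(0.5 * (forward(p, x)[1] - t) ** 2 for x, t in XOR_DATA)


def gradients(p):
    """Chain rule by hand, using sigmoid'(z) = s * (1 - s)."""
    g = {"W1": [[0.0, 0.0] for _ in range(N_HIDDEN)],
         "b1": [0.0] * N_HIDDEN, "W2": [0.0] * N_HIDDEN, "b2": 0.0}
    for x, t in XOR_DATA:
        h, y = forward(p, x)
        delta_out = (y - t) * y * (1 - y)  # dLoss/d(output pre-activation)
        g["b2"] += delta_out
        for j in range(N_HIDDEN):
            g["W2"][j] += delta_out * h[j]
            delta_h = delta_out * p["W2"][j] * h[j] * (1 - h[j])
            g["b1"][j] += delta_h
            g["W1"][j][0] += delta_h * x[0]
            g["W1"][j][1] += delta_h * x[1]
    return g


def train(p, lr=0.5, epochs=5000):
    """Plain gradient descent; returns the loss after every epoch."""
    history = []
    for _ in range(epochs):
        g = gradients(p)
        for j in range(N_HIDDEN):
            p["W1"][j][0] -= lr * g["W1"][j][0]
            p["W1"][j][1] -= lr * g["W1"][j][1]
            p["b1"][j] -= lr * g["b1"][j]
            p["W2"][j] -= lr * g["W2"][j]
        p["b2"] -= lr * g["b2"]
        history.append(loss(p))
    return history


if __name__ == "__main__":
    params = init_params(random.Random(0))
    losses = train(params)
    print("final loss:", losses[-1])
```

Whether a given run actually ends up at a clean XOR solution depends on the starting weights and the learning rate, so treat the defaults here as something to experiment with.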

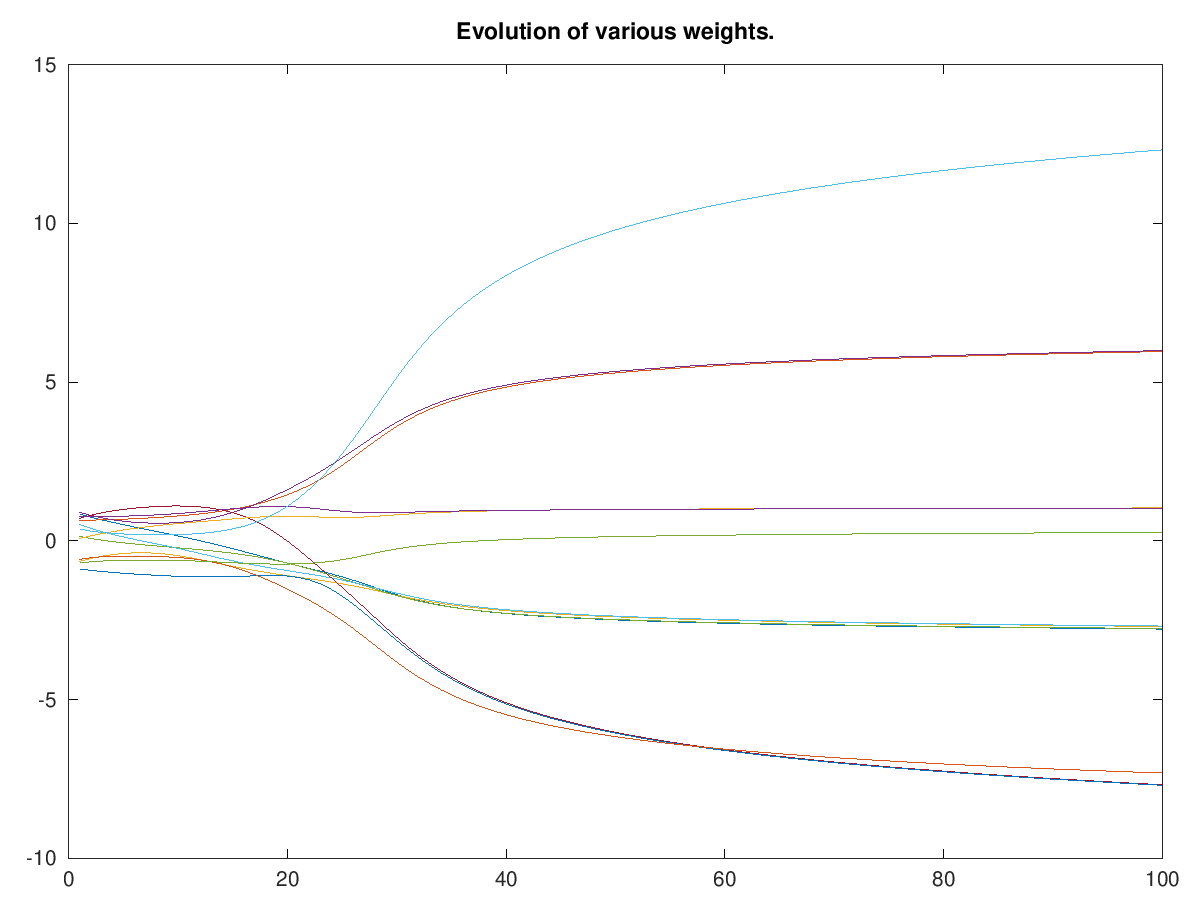

With such a small network we can actually plot the evolution of all the weights in the system.

We² can also quite easily draw the final architecture of the system.

I find it sort of fun to look through all four cases and try to figure out what the learned logic is. (Remember that we use the standard sigmoid function in all the neurons, which sends large positive numbers to roughly 1 and large negative numbers to roughly 0.)


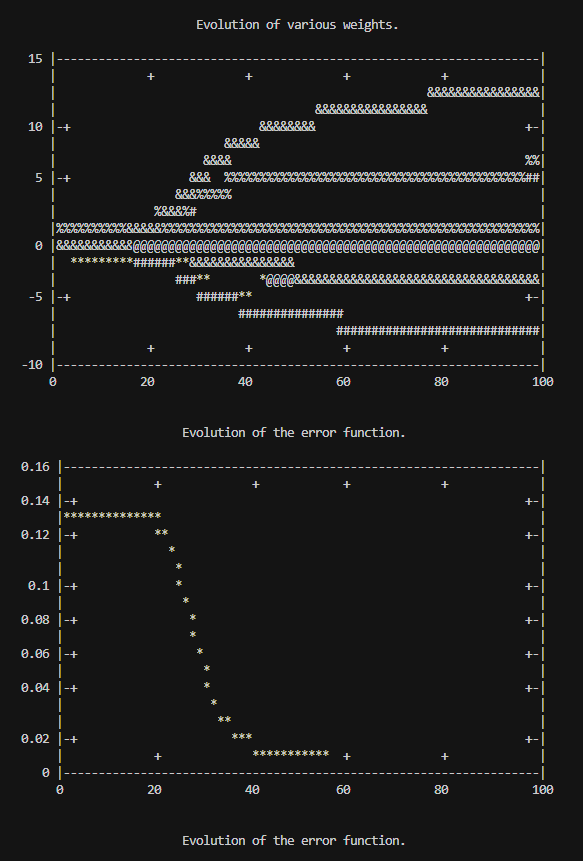

After five-plus years of building actual AI systems, I find this exceedingly cute and would put it on my fridge door. Also, I haven't used MATLAB in about five years, but when I reran the system after all this time, it was fun to note that when run without a graphical UI, MATLAB/Octave will produce ASCII plots.

These kinds of "Dwarf Fortress plots" are something I would definitely appreciate in Python as well.